Breaking: OpenAI Alums, Nobel Laureates Urge Regulators to Save Company's Nonprofit Structure

Converting to a for-profit model would undermine the company's founding mission to ensure AGI "benefits all of humanity," argues new letter

Don’t become a for-profit.

That’s the blunt message of a recent letter signed by more than 30 people, including former OpenAI employees, prominent civil-society leaders, legal scholars, and Nobel laureates such as AI pioneer Geoffrey Hinton and former World Bank chief economist Joseph Stiglitz.

Obsolete obtained the 25-page letter, which was sent last Thursday to the attorneys general (AGs) of California and Delaware, two officials with the power to block the deal.

Made public early Wednesday, the letter argues that OpenAI's proposed transformation from a nonprofit-controlled entity into a for-profit public benefit corporation (PBC) would fundamentally betray the organization's founding mission and could even be unlawful — placing the power and responsibility to intervene squarely with the state AGs.

OpenAI and the offices of the California and Delaware attorneys general did not reply to requests for comment.

The letter was primarily authored by Page Hedley, a lawyer who worked at OpenAI from 2017 to 2018 and recently left an AI policy role at Longview Philanthropy; Sunny Gandhi, political director of Encode AI; and Tyler Whitmer, founder and president of Legal Advocates for Safe Science and Technology.

(Encode AI receives funding from the Omidyar Network, where I am currently a Reporter in Residence, and I worked as a media consultant for Longview Philanthropy in 2022.)

Nonprofit origins

In 2015, OpenAI’s founders established it as a nonprofit research lab as a counter to for-profit AI companies like Google DeepMind. When executives felt they couldn't raise enough capital to compete at AI's increasingly expensive cutting edge, they launched a for-profit in 2019 that was ultimately controlled by the nonprofit. This unusual corporate structure was designed to keep the organization loyal to its mission: to ensure that artificial general intelligence (AGI) benefits all of humanity. AGI is defined in the OpenAI Charter as "a highly autonomous system that outperforms humans at most economically valuable work."

In the years since this structure was established, OpenAI has taken on tens of billions of dollars in investment, reached a $300 billion valuation, and arguably become the world's leading AI company.

But now, despite years of insisting that nonprofit governance was essential to its mission, OpenAI wants to abandon it — and adopt a more conventional corporate structure that prioritizes shareholder returns.

OpenAI has raised over $46 billion since October and has reportedly given investors the ability to ask for most of it back if the restructuring isn't completed by certain deadlines, the earliest of which falls at the end of this year.

"OpenAI may one day build technology that could get us all killed," writes former employee Nisan Stiennon in a supplemental statement. "It is to OpenAI's credit that it's controlled by a nonprofit with a duty to humanity. This duty precludes giving up that control."

In the letter, the authors argue that the AGs should investigate the conversion, prevent it from moving forward as planned, and work to ensure the OpenAI nonprofit board is sufficiently empowered, informed, independent, and willing to stand up to company management. If the board doesn't meet these requirements, the AGs should intervene, potentially going so far as to remove directors and appoint an independent oversight body, the letter suggests.

"The proposed restructuring would eliminate essential safeguards," the letter states, "effectively handing control of, and profits from, what could be the most powerful technology ever created to a for-profit entity with legal duties to prioritize shareholder returns."

This letter is the latest in a series of high-profile challenges to the OpenAI restructuring. Cofounder Elon Musk is suing to block it, with formal support from former employees, civil society groups, and Meta. Musk also offered to buy the nonprofit's assets for $97.4 billion, in a likely effort to bid up the price the new for-profit has to pay for control or to disrupt the transformation entirely.

And earlier this month, a separate coalition of over 50 California nonprofit leaders filed a petition with AG Rob Bonta's office, urging him to ensure that the nonprofit is adequately compensated for what it's giving up in the transition.

However, this new letter differs from past efforts by asking the AGs to pursue an intervention separate from Musk's suit and by arguing that the restructuring shouldn't move forward — no matter how much OpenAI's nonprofit gets compensated.

Nobel opposition

Three Nobel laureates feature prominently among the signatories: AI pioneer Geoffrey Hinton, and economists Oliver Hart and Joseph Stiglitz.

Hinton is the second-most-cited living scientist, and his pioneering work in deep learning earned him the 2024 Nobel Prize in Physics. In May 2023, Hinton resigned from his job at Google so he could freely warn that advanced AI could wipe out humanity.

In a supplemental statement, Hinton praised OpenAI’s commitment to ensuring that AGI helps humanity. “I would like them to execute that mission instead of enriching their investors. I’m happy there is an effort to hold OpenAI to its mission that does not involve Elon Musk,” he wrote.

Hart, who won the 2016 Nobel Prize in economics for his work on contract theory, was more blunt in his supplemental statement: “The proposed governance change is dangerous and should be resisted.”

Contradictions

The letter compiles a damning collection of statements from OpenAI's leadership that directly contradict its current push to abandon nonprofit control. These quotes paint a picture of an organization that chose its unique structure to safeguard humanity, and whose leaders repeatedly emphasized this commitment as central to their mission.

Back in 2015, Sam Altman emailed Elon Musk proposing a structure where the tech “belongs to the world via some sort of nonprofit,” adding that OpenAI would “aggressively support all regulation.” (Since then, OpenAI has lobbied to weaken or kill several AI laws.)

In 2017, Altman told an audience in London: “We don’t ever want to be making decisions to benefit shareholders. The only people we want to be accountable to is humanity as a whole.”

In 2019, president Greg Brockman clarified something subtle but important: “The true mission isn’t for OpenAI to build AGI. The true mission is for AGI to go well for humanity... our goal is to make sure it goes well for the world.”

Altman made clear that some money was not worth making: “There are things we wouldn't be willing to do no matter how much money they made,” he told Kara Swisher in 2018, “and we made this public so the public would hold us accountable.”

And in a 2020 interview, Altman warned that if OpenAI succeeded at building AGI, it might “capture the light cone of all future value in the universe.” That, he said, “is for sure not okay for one group of investors to have.”

(The light cone refers to all of the universe that Earth-originating life could theoretically affect, to give you a sense of Altman's ambitions.)

This wasn’t just rhetoric. When OpenAI launched its for-profit arm in 2019, it promised that all employees and investors would sign contracts putting the nonprofit Charter first — “even at the expense of some or all of their financial stake.” The for-profit announcement also included the assurance: "Regardless of how the world evolves, we are committed — legally and personally — to our mission."

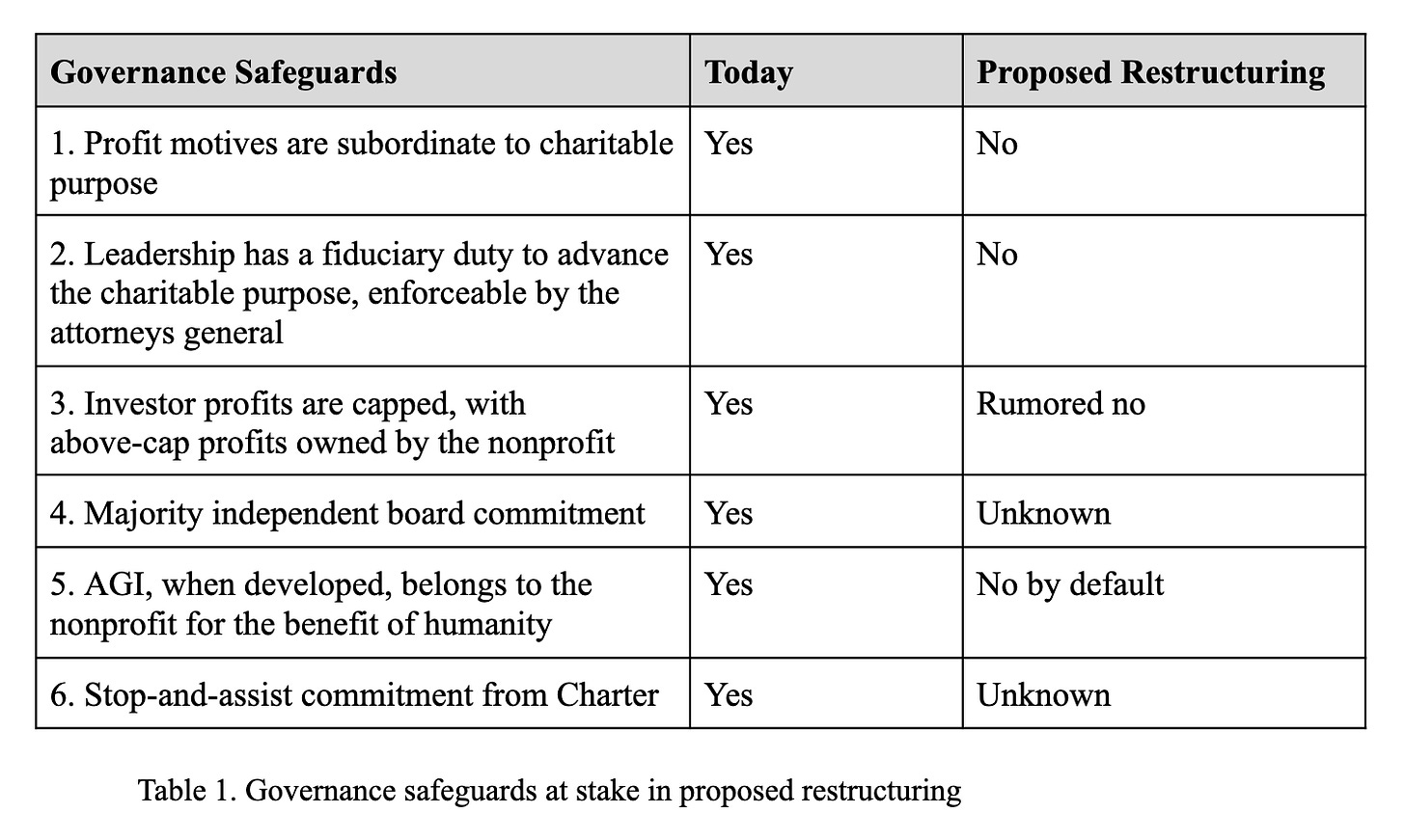

In his 2023 Congressional testimony, Altman highlighted key safeguards in OpenAI's "unusual structure" that keeps it mission-focused: nonprofit control over the for-profit subsidiary, fiduciary duties to humanity rather than investors, a majority-independent board with no equity stakes, capped investor profits with residual value flowing to the nonprofit, and explicit reservation of AGI technologies for nonprofit governance.

The letter then warns that "these safeguards are now in jeopardy under the proposed restructuring" and goes through the status of each one.

Justifications

Taken together, these statements leave little room for interpretation. OpenAI deliberately chose a nonprofit-controlled structure with the explicit goal of safeguarding humanity from profit-driven AGI development — the very protection it now seeks to dismantle.

The letter surgically dissects OpenAI's justifications for abandoning its nonprofit governance structure, arguing they prioritize commercial competitiveness over the organization's core charitable mission.

OpenAI's primary rationale, stated in recent court filings, is that its unusual structure makes it harder to attract investment and talent. But the letter authors counter that this is precisely the point — OpenAI was specifically designed to operate differently.

"Competitive advantage might be a relevant factor, but it is not a sufficient reason to restructure,” the letter argues. "OpenAI's charitable purpose is not to make money or capture market share." It points out that OpenAI's current structure intentionally accepts certain competitive disadvantages as the cost of prioritizing humanity's interests over profits.

These disadvantages might be overrated, suggests signatory Luigi Zingales of the University of Chicago Booth School of Business in his supplemental statement:

The current structure, which caps returns at 100x the capital invested, does not really constrain its ability to raise funds. So, what is the need to transfer the control to a for-profit? To overrule the mandate that AI should be used for the benefit of humanity.

These profit caps were designed to ensure that OpenAI could redistribute exorbitant returns in a world where the company actually builds AGI and puts much of the world out of work. They're one of the things reported to be on the chopping block as part of the proposed restructuring.

The letter authors write:

OpenAI might respond that a competitive advantage inherently advances its mission, but that argument is an implicit comparison of OpenAI and its competitors: that humanity would be better off if OpenAI builds AGI before competing companies. Based on OpenAI’s recent track record, this argument is unlikely to be convincing…

They then list reports of OpenAI's broken promises, rushed safety testing, doublespeak and hypocrisy, and coercive non-disparagement agreements.

Moreover, the letter highlights that OpenAI has not adequately explained why removing nonprofit control is necessary to address its stated issues. While the company claims investors demand simplification of its "capital structure," it fails to demonstrate why this requires eliminating nonprofit oversight rather than more targeted adjustments.

The authors conclude that OpenAI's plans for "one of the best resourced non-profits in history" miss the point entirely. This isn’t about building a well-funded foundation for generic good works — it’s about retaining governance over AGI itself. The letter bluntly states that OpenAI "should not be permitted to sell out its mission."

"No sale price can compensate"

OpenAI claims the restructuring would create "one of the best resourced nonprofits in history" that would "pursue charitable initiatives in sectors such as health care, education, and science."

But the letter's authors argue this would mark a fundamental shift in OpenAI’s charitable purpose — one that, under nonprofit law, can only be altered in exceptional circumstances.

Perhaps the most distinctive argument in the letter is that, given OpenAI's mission to ensure that AGI benefits all of humanity, no sale price could adequately compensate the nonprofit for what it would be giving up — control over the company that is arguably closest to building AGI.

The authors essentially say: this isn’t a debate about fair market value. The whole premise of the nonprofit is that AGI governance isn’t something you can price — and that no board serving the public could ever justify giving it up for cash, no matter the number.

Actually, I can think of one outcome that could potentially satisfy this condition. As strong a claim as OpenAI has to leadership of the AI industry, it's only one company. If it slows down for the sake of safety, others could overtake it. So perhaps the OpenAI nonprofit would better advance its mission if it were spun out into a truly independent entity with $150 billion and the mission to lobby for binding domestic and international safeguards on advanced AI systems.

If this sounds far-fetched, then so should the idea that the nonprofit board that initiated this conversion is genuinely representing the public interest.

An institutional test

The question of whether this restructuring is legal is now before two state AGs. But whether it goes forward may ultimately come down to politics. California and Delaware's AGs may be officers of the law, but they're also elected by the people.

Delaware AG Kathleen Jennings has already publicly stated that her office is investigating the proposal, and CalMatters reported in January that California AG Rob Bonta's office was doing the same.

And in a letter sent last week to Musk's legal team, Bonta's office denied Musk's request for "relator status," which would have allowed him to sue OpenAI in the name of the State of California. The AG's office specifically noted that Musk appeared to have personal and financial interests in OpenAI's assets through his competing company, xAI.

Musk's suit makes legal arguments, but it can't be fully separated from the man himself, whose far-right turn has made him politically toxic in Democratic circles (both AGs in question are Democrats in deep-blue states).

By rejecting Musk's bid to insert himself as California's representative, Bonta signaled that his office intends to maintain direct control over any potential enforcement actions regarding OpenAI's charitable purpose.

The letter’s authors essentially argue that this moment is a test — not just for OpenAI’s board, but for the institutions meant to safeguard the public interest. The attorneys general of California and Delaware have both indicated they’re paying attention.

Whether they act may determine if OpenAI’s fiduciary duty to humanity is a real constraint — or just another marketing line sacrificed to investor pressure.

Edited by Sid Mahanta.

Appendix: Quotes from OpenAI’s leaders over the years

The letter includes some great quotes from Altman and Brockman — some of which I hadn’t actually seen before. I’m including them here with links for convenience.

Sam Altman in 2017: "That’s why we’re a nonprofit: we don’t ever want to be making decisions to benefit shareholders. The only people we want to be accountable to is humanity as a whole."

Greg Brockman in 2019: "The true mission isn’t for OpenAI to build AGI. The true mission is for AGI to go well for humanity… our goal isn’t to be the ones to build it, our goal is to make sure it goes well for the world."

Altman in 2020: "The problem with AGI specifically is that if we’re successful, and we tried, maybe we could capture the light cone of all future value in the universe. And that is for sure not okay for one group of investors to have."

Altman in a 2015 email to Musk: "we could structure it so that the tech belongs to the world via some sort of nonprofit but the people working on it get startup-like compensation if it works. Obviously we’d comply with/aggressively support all regulation"

OpenAI's 2019 announcement of its for-profit arm, OpenAI LP:

We’ve designed OpenAI LP to put our overall mission—ensuring the creation and adoption of safe and beneficial AGI—ahead of generating returns for investors… Regardless of how the world evolves, we are committed—legally and personally—to our mission.

…

All investors and employees sign agreements that OpenAI LP’s obligation to the Charter always comes first, even at the expense of some or all of their financial stake.

Altman on the hybrid model in 2022 (at 35:16):

We wanted to preserve as much as we could of the specialness of the nonprofit approach, the benefit sharing, the governance, what I consider maybe to be most important of all, which is the safety features and incentives.

Brockman at a House subcommittee hearing in 2018:

On the ethical front, that’s really core to my organization. That’s the reason we exist . . . when it comes to the benefits of who owns this technology? Who gets it? You know, where did the dollars go? We think it belongs to everyone.

At OpenAI when we wrote our charter, we talked about the scenarios where we would or wouldn’t make money. And… the things we wouldn’t be willing to do no matter how much money they made. And we made this public so the public would hold us accountable to that. And I think that’s really important.
